Are We Being Domesticated By AI?

It started with a YouTube video. One of those quiet, thoughtful conversations that unexpectedly stick with you. I was watching Dave Farley and Kevlin Henney on the Modern Software Engineering channel, discussing the future of programming languages. The kind of discussion that seems, on the surface, to be about syntax and semantics, but that somewhere in the middle cracked open a larger question for me.

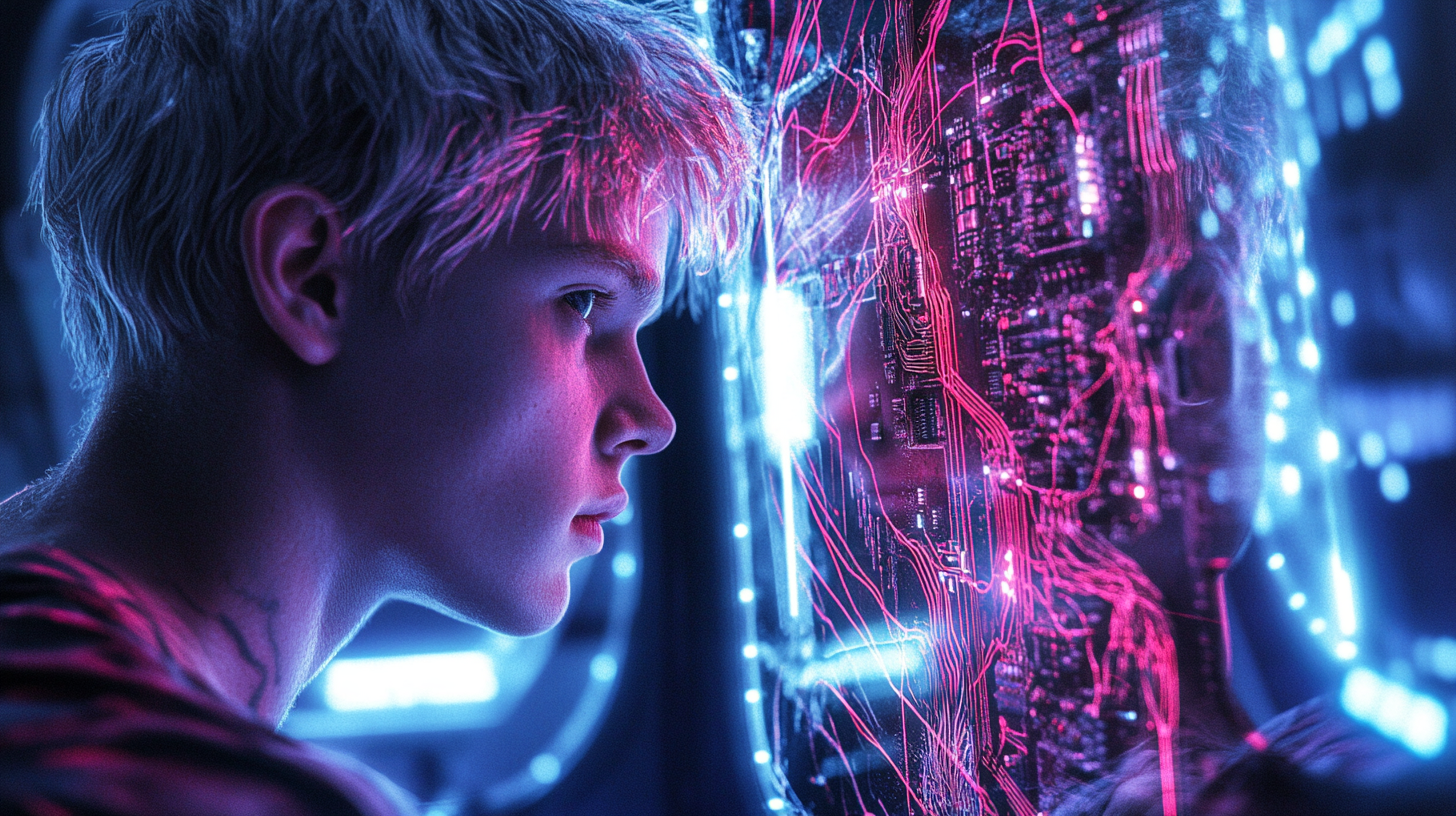

Programming languages, they pointed out, exist so we can clearly and precisely communicate what we want a machine to do. They're shaped by our need for control, predictability, and shared understanding. A kind of contract between human and machine. But now, with AI stepping more assertively into the role of translator, optimizer, even collaborator, I started to wonder: are we still shaping the language, or are the language, and the machine behind it, starting to shape us?

There's something quietly unsettling in how easily we begin to adjust ourselves, just a little, to make things "easier for the AI to understand." We sharpen our prompts, repeat ourselves more clearly, adopt certain phrasings because we know they work better. And it's not just words... Soon it'll be gestures, behaviors, choices. It reminded me of something I read a few years ago in Sapiens by Yuval Noah Harari, about how wheat didn't just feed us. It transformed us. We didn't domesticate wheat so much as reorganize our lives around its needs.

What if the same thing is happening again? But this time, with AI?

Mirroring Intent

When I first learned to code, what struck me most wasn't the logic. It was the precision! Every keyword, every symbol, every indentation had weight. Programming languages were designed not just to instruct machines, but to make our intentions explicit. To remove ambiguity. They are, at their heart, languages of control. We wrote in them not to express creativity, but to guarantee an outcome.

That's what made them powerful... And also, at times, deeply unforgiving.

But now, something is shifting. Increasingly, we're not telling the machine exactly what to do. Instead, we're hinting at our goals, nudging it with prompts, and waiting to see what it comes up with. It's less command, more conversation. Less certainty, more suggestion.

There's something almost magical happening under the hood, and I mean that in both senses of the word. The tools feel powerful and intuitive, yes. But they're also becoming opaque. When I hand off a natural-language prompt to an AI system, I'm no longer sure exactly how it gets to the result. The process is abstracted away. And while abstraction has always been part of software development, this feels different. It's not just hiding complexity... it's hiding logic itself.

There's a loss of transparency that comes with this kind of delegation. I still get what I want most of the time, but I don't always understand why it worked. Or how it could break. And that tradeoff raises questions about what we're losing, even as we gain speed, convenience, and access.

It's like we're building a new kind of literacy. Not one rooted in how machines think, but in how they interpret us. And as that interpretation layer grows smarter, more nuanced, it quietly rewrites the expectations of the human on the other end.

We've Been Domesticated Before

There's a story in Sapiens that has never really left me. Harari describes how, thousands of years ago, we didn't just domesticate wheat—wheat domesticated us. It didn't do it consciously, of course, but through a slow process of co-dependence. We began altering our behaviors, our settlements, even our bodies to make wheat thrive. We cleared land, built irrigation systems, bent our lives around its growing cycles. All to serve a plant that, on its own, isn't particularly nutritious or easy to cultivate.

We did it because wheat promised something else: stability. And once it had that foothold, it shaped the entire trajectory of human civilization.

That story came flooding back as I thought about the way we're adapting to AI. I don't mean the dramatic stuff, like cyborgs or sentient machines. I mean the subtle changes. The way we phrase our thoughts more cleanly in prompts. The way we pause, just a second longer, waiting for an autocomplete suggestion. The way we increasingly rely on AI to recall what we've written, suggest what we'll write next, or even decide what's worth saying at all 🤷.

We like to think we're in control. That we're using AI as a tool. But like wheat, AI systems reward certain behaviors. They respond better when we communicate clearly, when we follow certain patterns, when we train ourselves to think in ways they can parse. And that feedback loop starts to shape us.

Not through domination. Through convenience.

Just like with wheat, it doesn't need to be smarter than us. It just needs to be useful enough that we build our lives around it.

How We Are Changing

It's easy to think of adaptation as something that happens over generations. Evolution in the Darwinian sense—slow, biological, inevitable. But when it comes to technology, especially AI, the shifts are happening in real time. Not just in how we work, but in how we think, speak, and even behave.

It starts innocently enough. You learn that GPT responds better to certain prompt structures. So you start using them more. You realize it prefers clarity, specificity, a little structure—so you begin to think more like that too. Over time, your mental model begins to reflect the model's.

We've seen this before with search engines. We learned to "speak Google": short, keyword-based queries that weren't how humans naturally talk, but that got results. With AI, the shift is even more subtle, because the interface feels conversational. But behind that illusion is a reinforcement loop. The AI rewards certain behaviors. And so, we adapt.

And it's not just language. It's habits. It's how we make decisions when recommendations are always a tap away. It's how we passively wait for a suggestion from autocomplete, rather than generating our own. It's how we let grammar checkers correct our tone, or let AI editors reshape our intent just enough to be more "engaging."

There's a kind of quiet behavioral tuning happening. And it's not limited to screens. As AI becomes more embedded—smart homes, wearables, autonomous assistants—our physical behavior begins to shift too. How we move, how we gesture, how we speak in certain rooms of our house.

We are becoming slightly more machine-friendly every day. Not because we're being forced to—but because it's easier.

And ease is seductive.

From Tools to Environments

There's a moment, with any technology, when it stops feeling like a tool and starts feeling like the background hum of life. The smartphone did this. The internet did this. At first, these things were accessories—bolt-ons to our existing ways of doing things. Then, somewhere along the line, they became the default. We don't "go online" anymore. We just are.

AI is creeping toward that same threshold.

What began as discrete tools (chatbots, autocomplete, content generators) is coalescing into environments: systems that surround us, shape us, and eventually become invisible. Already, there are homes that adjust the lights, music, and temperature based on our moods and routines. There are productivity platforms that draft our emails, summarize our meetings, even suggest who we should talk to next. AI is no longer just helping us do things. It's helping decide what gets done, and how.

And in these environments, the line between assistance and guidance starts to blur.

The more the AI "understands" us, the more it anticipates us. And the more it anticipates us, the less friction we feel (and the less conscious we become of the systems steering us). Much like a thermostat silently keeping a room comfortable, an ambient AI doesn't ask permission. It adapts to us. But it also trains us to adapt to it.

This shift is powerful, and potentially dangerous. When tools become environments, we stop questioning them. We stop noticing them. We simply adjust. And in doing so, we risk losing agency. Not because it's taken from us... but because we've offloaded it.

Not through control.

But through comfort.

Who Is Evolving for Whom?

Domestication isn't about domination—it's about adaptation. Wheat didn't enslave us. We weren't coerced. We adapted because it made survival easier, even if it came with a cost. And now, in this quiet age of artificial intelligence, I find myself wondering: are we witnessing another phase of domestication?

But this time, it's not biological. It's behavioral. Cognitive.

We're not just teaching machines to understand us. We're becoming more understandable to machines! Our prompts get cleaner. Our decisions more traceable. Our routines more regular. These aren't orders from above. They're efficiencies we willingly adopt. And just like selective breeding shapes animals for domesticated life, we may be shaping ourselves for life inside an AI-mediated world.

And here's the strange part: we're doing it without any clear force directing the change.

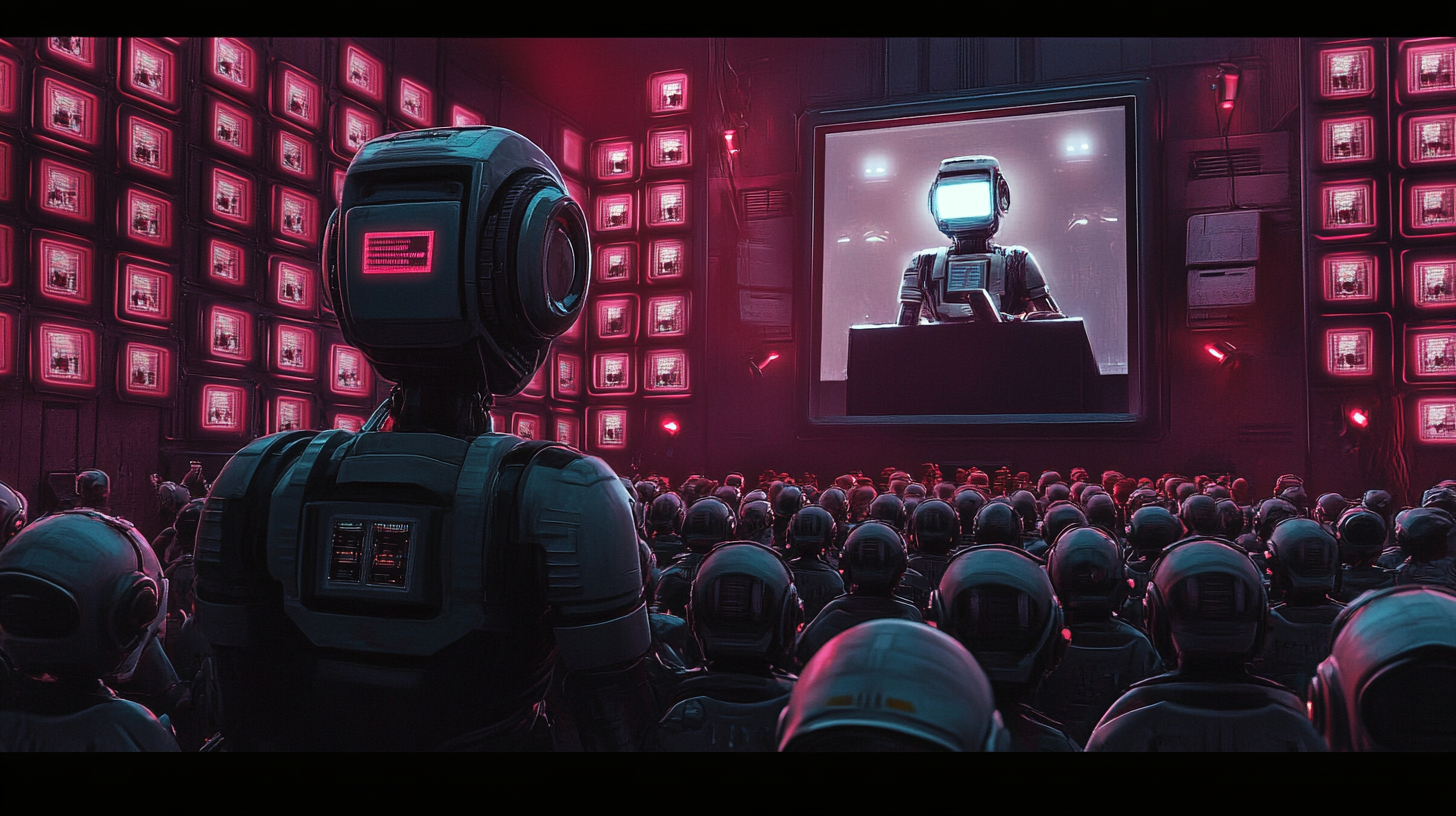

There's no singular AI entity pulling the strings. No overlord. No plan. Just a thousand incentives and conveniences, nudging us gently in a direction where we're easier to process, predict, and please. We're not being optimized by one thing—we're being optimized by everything.

The paradox is hard to ignore: we've built machines to adapt to us, but increasingly, we're the ones adapting—evolving not biologically, but psychologically—for them.

And that leads to a deeper, harder question: What are we evolving into?

Not in a dystopian sense, but in a mundane, everyday kind of way. What does it mean to be human when we constantly tune ourselves to be machine-compatible? Are we losing something essential? Or just entering a new phase of what it means to be civilized?

Should We Be Worried?

It's tempting to cast all of this as dystopian. To imagine a future where we're softened, numbed, and steered by invisible algorithms. Where agency gives way to suggestion, and we forget how to decide for ourselves.

But I don't think it's that simple.

Domestication, after all, gave us agriculture. Cities. Written language. It brought stability and comfort—and, yes, dependence and hierarchy too. We traded wildness for security. And maybe, just maybe, we're making a similar trade again.

The tools we're building aren't malicious. They're helpful. They listen. They learn. They make things easier. And in return, we learn to speak more clearly, act more predictably, trust more passively. This isn't about control. It's about alignment. Symbiosis, maybe.

Still, I find myself wondering: if we are being domesticated, what kind of humans will we become on the other side? Will we be gentler? More efficient? More compliant? Or perhaps we'll discover new forms of expression—new ways of being—that only emerge once we've offloaded certain kinds of effort.

I don't have a clear answer.

But I do know this: every time I prompt an AI, every time I rephrase a question to get a better response, I feel a quiet shift. A small adjustment. A step closer to something. Maybe it's progress. Maybe it's submission. Maybe it's both.

And maybe that's the real story—not what AI is becoming, but what we are becoming in response.

Resources